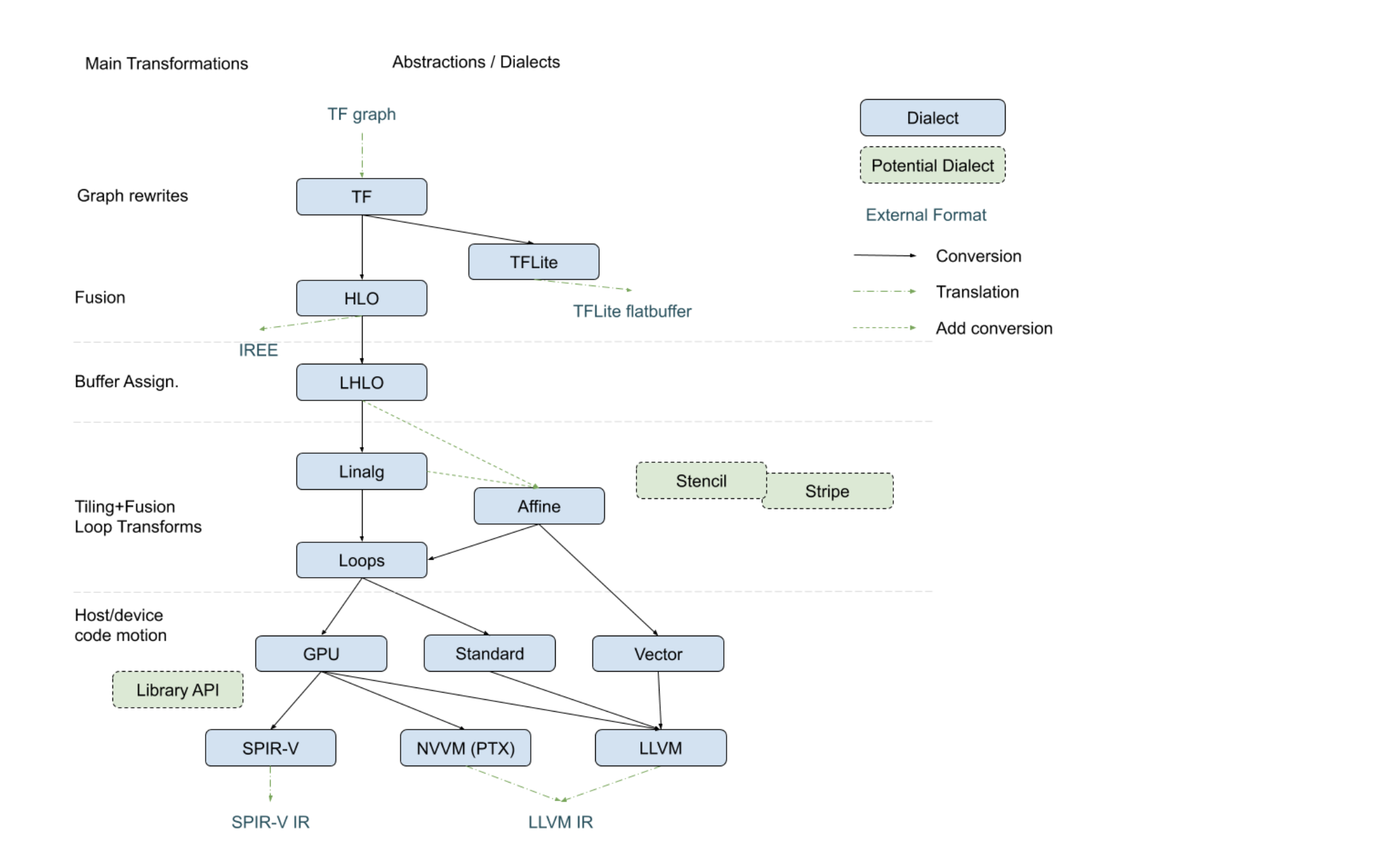

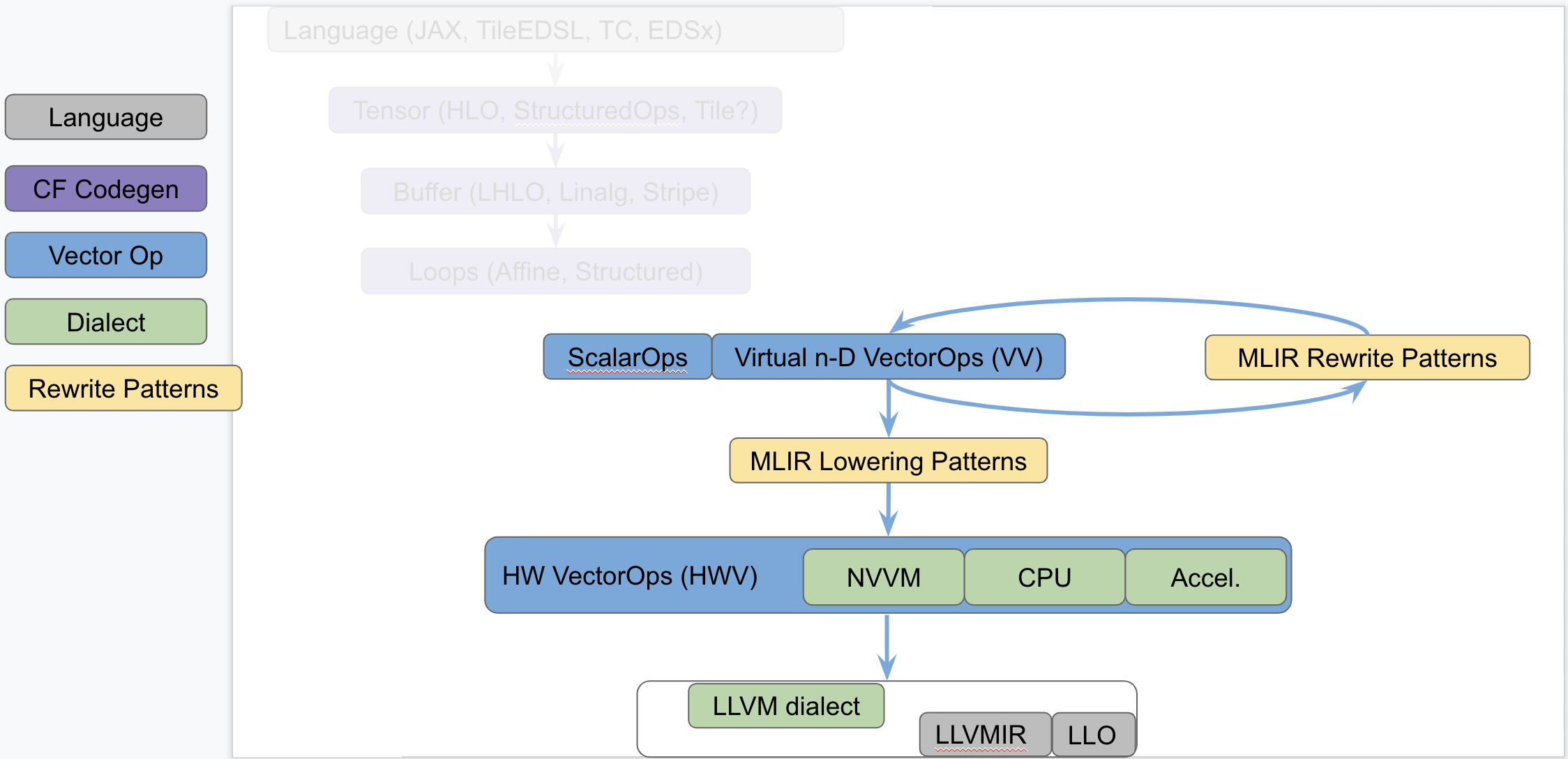

1# 'vector' Dialect 2 3[TOC] 4 5MLIR supports multi-dimensional `vector` types and custom operations on those 6types. A generic, retargetable, higher-order `vector` type (`n-D` with `n > 1`) 7is a structured type, that carries semantic information useful for 8transformations. This document discusses retargetable abstractions that exist in 9MLIR today and operate on ssa-values of type `vector` along with pattern 10rewrites and lowerings that enable targeting specific instructions on concrete 11targets. These abstractions serve to separate concerns between operations on 12`memref` (a.k.a buffers) and operations on `vector` values. This is not a new 13proposal but rather a textual documentation of existing MLIR components along 14with a rationale. 15 16## Positioning in the Codegen Infrastructure 17 18The following diagram, recently presented with the 19[StructuredOps abstractions](https://drive.google.com/corp/drive/u/0/folders/1sRAsgsd8Bvpm_IxREmZf2agsGU2KvrK-), 20captures the current codegen paths implemented in MLIR in the various existing 21lowering paths. 22 23 24The following diagram seeks to isolate `vector` dialects from the complexity of 25the codegen paths and focus on the payload-carrying ops that operate on std and 26`vector` types. This diagram is not to be taken as set in stone and 27representative of what exists today but rather illustrates the layering of 28abstractions in MLIR. 29 30 31 32This separates concerns related to (a) defining efficient operations on 33`vector` types from (b) program analyses + transformations on `memref`, loops 34and other types of structured ops (be they `HLO`, `LHLO`, `Linalg` or other ). 35Looking a bit forward in time, we can put a stake in the ground and venture that 36the higher level of `vector`-level primitives we build and target from codegen 37(or some user/language level), the simpler our task will be, the more complex 38patterns can be expressed and the better performance will be. 39 40## Components of a Generic Retargetable Vector-Level Dialect 41 42The existing MLIR `vector`-level dialects are related to the following bottom-up 43abstractions: 44 451. Representation in `LLVMIR` via data structures, instructions and intrinsics. 46 This is referred to as the `LLVM` level. 472. Set of machine-specific operations and types that are built to translate 48 almost 1-1 with the HW ISA. This is referred to as the Hardware Vector 49 level; a.k.a `HWV`. For instance, we have (a) the `NVVM` dialect (for 50 `CUDA`) with tensor core ops, (b) accelerator-specific dialects (internal), 51 a potential (future) `CPU` dialect to capture `LLVM` intrinsics more closely 52 and other dialects for specific hardware. Ideally this should be 53 auto-generated as much as possible from the `LLVM` level. 543. Set of virtual, machine-agnostic, operations that are informed by costs at 55 the `HWV`-level. This is referred to as the Virtual Vector level; a.k.a 56 `VV`. This is the level that higher-level abstractions (codegen, automatic 57 vectorization, potential vector language, ...) targets. 58 59The existing generic, retargetable, `vector`-level dialect is related to the 60following top-down rewrites and conversions: 61 621. MLIR Rewrite Patterns applied by the MLIR `PatternRewrite` infrastructure to 63 progressively lower to implementations that match closer and closer to the 64 `HWV`. Some patterns are "in-dialect" `VV -> VV` and some are conversions 65 `VV -> HWV`. 662. `Virtual Vector -> Hardware Vector` lowering is specified as a set of MLIR 67 lowering patterns that are specified manually for now. 683. `Hardware Vector -> LLVM` lowering is a mechanical process that is written 69 manually at the moment and that should be automated, following the `LLVM -> 70 Hardware Vector` ops generation as closely as possible. 71 72## Short Description of the Existing Infrastructure 73 74### LLVM level 75 76On CPU, the `n-D` `vector` type currently lowers to `!llvm<array<vector>>`. 77More concretely, 78* `vector<4x8x128xf32>` lowers to `!llvm<[4 x [ 8 x < 128 79x float >]]>` (fixed-width vector), and 80* `vector<4x8x[128]xf32>` lowers to `!llvm<[4 x [ 8 x < vscale x 128 81x float >]]>` (scalable vector). 82 83There are tradeoffs involved related to how one can access subvectors and how 84one uses `llvm.extractelement`, `llvm.insertelement` and `llvm.shufflevector`. 85The section on [LLVM Lowering Tradeoffs](#llvm-lowering-tradeoffs) offers a 86deeper dive into the current design choices and tradeoffs. 87 88Note, while LLVM supports arrarys of scalable vectors, these are required to be 89fixed-width arrays of 1-D scalable vectors. This means scalable vectors with a 90non-trailing scalable dimension (e.g. `vector<4x[8]x128xf32`) are not 91convertible to LLVM. 92 93Finally, MLIR takes the same view on scalable Vectors as LLVM (c.f. 94[VectorType](https://llvm.org/docs/LangRef.html#vector-type)): 95> For scalable vectors, the total number of elements is a constant multiple 96> (called vscale) of the specified number of elements; vscale is a positive 97> integer that is unknown at compile time and the same hardware-dependent 98> constant for all scalable vectors at run time. The size of a specific 99> scalable vector type is thus constant within IR, even if the exact size in 100> bytes cannot be determined until run time. 101 102### Hardware Vector Ops 103 104Hardware Vector Ops are implemented as one dialect per target. For internal 105hardware, we are auto-generating the specific HW dialects. For `GPU`, the `NVVM` 106dialect adds operations such as `mma.sync`, `shfl` and tests. For `CPU` things 107are somewhat in-flight because the abstraction is close to `LLVMIR`. The jury is 108still out on whether a generic `CPU` dialect is concretely needed, but it seems 109reasonable to have the same levels of abstraction for all targets and perform 110cost-based lowering decisions in MLIR even for `LLVM`. Specialized `CPU` 111dialects that would capture specific features not well captured by LLVM peephole 112optimizations of on different types that core MLIR supports (e.g. Scalable 113Vectors) are welcome future extensions. 114 115### Virtual Vector Ops 116 117Some existing Arith and Vector Dialect on `n-D` `vector` types comprise: 118 119```mlir 120// Produces a vector<3x7x8xf32> 121%a = arith.addf %0, %1 : vector<3x7x8xf32> 122// Produces a vector<3x7x8xf32> 123%b = arith.mulf %0, %1 : vector<3x7x8xf32> 124// Produces a vector<3x7x8xf32> 125%c = vector.splat %1 : vector<3x7x8xf32> 126 127%d = vector.extract %0[1]: vector<7x8xf32> from vector<3x7x8xf32> 128%e = vector.extract %0[1, 5]: vector<8xf32> from vector<3x7x8xf32> 129%f = vector.outerproduct %0, %1: vector<4xf32>, vector<8xf32> // -> vector<4x8xf32> 130%g = vector.outerproduct %0, %1, %2: vector<4xf32>, vector<8xf32> // fma when adding %2 131 132// Returns a slice of type vector<2x2x16xf32> 133%h = vector.strided_slice %0 134 {offsets = [2, 2], sizes = [2, 2], strides = [1, 1]}: 135 vector<4x8x16xf32> 136 137%i = vector.transfer_read %A[%0, %1] 138 {permutation_map = (d0, d1) -> (d0)}: 139 memref<7x?xf32>, vector<4xf32> 140 141vector.transfer_write %f1, %A[%i0, %i1, %i2, %i3] 142 {permutation_map = (d0, d1, d2, d3) -> (d3, d1, d0)} : 143 vector<5x4x3xf32>, memref<?x?x?x?xf32> 144``` 145 146The list of Vector is currently undergoing evolutions and is best kept track of 147by following the evolution of the 148[VectorOps.td](https://github.com/llvm/llvm-project/blob/main/mlir/include/mlir/Dialect/Vector/IR/VectorOps.td) 149ODS file (markdown documentation is automatically generated locally when 150building and populates the 151[Vector doc](https://github.com/llvm/llvm-project/blob/main/mlir/docs/Dialects/Vector.md)). 152Recent extensions are driven by concrete use cases of interest. A notable such 153use case is the `vector.contract` op which applies principles of the 154StructuredOps abstraction to `vector` types. 155 156### Virtual Vector Rewrite Patterns 157 158The following rewrite patterns exist at the `VV->VV` level: 159 1601. The now retired `MaterializeVector` pass used to legalize ops on a 161 coarse-grained virtual `vector` to a finer-grained virtual `vector` by 162 unrolling. This has been rewritten as a retargetable unroll-and-jam pattern 163 on `vector` ops and `vector` types. 1642. The lowering of `vector_transfer` ops legalizes `vector` load/store ops to 165 permuted loops over scalar load/stores. This should evolve to loops over 166 `vector` load/stores + `mask` operations as they become available `vector` 167 ops at the `VV` level. 168 169The general direction is to add more Virtual Vector level ops and implement more 170useful `VV -> VV` rewrites as composable patterns that the PatternRewrite 171infrastructure can apply iteratively. 172 173### Virtual Vector to Hardware Vector Lowering 174 175For now, `VV -> HWV` are specified in C++ (see for instance the 176[SplatOpLowering for n-D vectors](https://github.com/tensorflow/mlir/commit/0a0c4867c6a6fcb0a2f17ef26a791c1d551fe33d) 177or the 178[VectorOuterProductOp lowering](https://github.com/tensorflow/mlir/commit/957b1ca9680b4aacabb3a480fbc4ebd2506334b8)). 179 180Simple 181[conversion tests](https://github.com/llvm/llvm-project/blob/main/mlir/test/Conversion/VectorToLLVM/vector-to-llvm.mlir) 182are available for the `LLVM` target starting from the Virtual Vector Level. 183 184## Rationale 185 186### Hardware as `vector` Machines of Minimum Granularity 187 188Higher-dimensional `vector`s are ubiquitous in modern HPC hardware. One way to 189think about Generic Retargetable `vector`-Level Dialect is that it operates on 190`vector` types that are multiples of a "good" `vector` size so the HW can 191efficiently implement a set of high-level primitives (e.g. 192`vector<8x8x8x16xf32>` when HW `vector` size is say `vector<4x8xf32>`). 193 194Some notable `vector` sizes of interest include: 195 1961. CPU: `vector<HW_vector_size * k>`, `vector<core_count * k’ x 197 HW_vector_size * k>` and `vector<socket_count x core_count * k’ x 198 HW_vector_size * k>` 1992. GPU: `vector<warp_size * k>`, `vector<warp_size * k x float4>` and 200 `vector<warp_size * k x 4 x 4 x 4>` for tensor_core sizes, 2013. Other accelerators: n-D `vector` as first-class citizens in the HW. 202 203Depending on the target, ops on sizes that are not multiples of the HW `vector` 204size may either produce slow code (e.g. by going through `LLVM` legalization) or 205may not legalize at all (e.g. some unsupported accelerator X combination of ops 206and types). 207 208### Transformations Problems Avoided 209 210A `vector<16x32x64xf32>` virtual `vector` is a coarse-grained type that can be 211“unrolled” to HW-specific sizes. The multi-dimensional unrolling factors are 212carried in the IR by the `vector` type. After unrolling, traditional 213instruction-level scheduling can be run. 214 215The following key transformations (along with the supporting analyses and 216structural constraints) are completely avoided by operating on a `vector` 217`ssa-value` abstraction: 218 2191. Loop unroll and unroll-and-jam. 2202. Loop and load-store restructuring for register reuse. 2213. Load to store forwarding and Mem2reg. 2224. Coarsening (raising) from finer-grained `vector` form. 223 224Note that “unrolling” in the context of `vector`s corresponds to partial loop 225unroll-and-jam and not full unrolling. As a consequence this is expected to 226compose with SW pipelining where applicable and does not result in ICache blow 227up. 228 229### The Big Out-Of-Scope Piece: Automatic Vectorization 230 231One important piece not discussed here is automatic vectorization (automatically 232raising from scalar to n-D `vector` ops and types). The TL;DR is that when the 233first "super-vectorization" prototype was implemented, MLIR was nowhere near as 234mature as it is today. As we continue building more abstractions in `VV -> HWV`, 235there is an opportunity to revisit vectorization in MLIR. 236 237Since this topic touches on codegen abstractions, it is technically out of the 238scope of this survey document but there is a lot to discuss in light of 239structured op type representations and how a vectorization transformation can be 240reused across dialects. In particular, MLIR allows the definition of dialects at 241arbitrary levels of granularity and lends itself favorably to progressive 242lowering. The argument can be made that automatic vectorization on a loops + ops 243abstraction is akin to raising structural information that has been lost. 244Instead, it is possible to revisit vectorization as simple pattern rewrites, 245provided the IR is in a suitable form. For instance, vectorizing a 246`linalg.generic` op whose semantics match a `matmul` can be done 247[quite easily with a pattern](https://github.com/tensorflow/mlir/commit/bff722d6b59ab99b998f0c2b9fccd0267d9f93b5). 248In fact this pattern is trivial to generalize to any type of contraction when 249targeting the `vector.contract` op, as well as to any field (`+/*`, `min/+`, 250`max/+`, `or/and`, `logsumexp/+` ...) . In other words, by operating on a higher 251level of generic abstractions than affine loops, non-trivial transformations 252become significantly simpler and composable at a finer granularity. 253 254Irrespective of the existence of an auto-vectorizer, one can build a notional 255vector language based on the VectorOps dialect and build end-to-end models with 256expressing `vector`s in the IR directly and simple pattern-rewrites. 257[EDSC](https://github.com/llvm/llvm-project/blob/main/mlir/docs/EDSC.md)s 258provide a simple way of driving such a notional language directly in C++. 259 260## Bikeshed Naming Discussion 261 262There are arguments against naming an n-D level of abstraction `vector` because 263most people associate it with 1-D `vector`s. On the other hand, `vector`s are 264first-class n-D values in MLIR. The alternative name Tile has been proposed, 265which conveys higher-D meaning. But it also is one of the most overloaded terms 266in compilers and hardware. For now, we generally use the `n-D` `vector` name and 267are open to better suggestions. 268 269## 0D Vectors 270 271Vectors of dimension 0 (or _0-D vectors_ or _0D vectors_) are allowed inside 272MLIR. For instance, a `f32` vector containing one scalar can be denoted as 273`vector<f32>`. This is similar to the `tensor<f32>` type that is available in 274TensorFlow or the `memref<f32>` type that is available in MLIR. 275 276Generally, a 0D `vector` can be interpreted as a scalar. The benefit of 0D 277`vector`s, `tensor`s, and `memref`s is that they make it easier to lower code 278from various frontends such as TensorFlow and make it easier to handle corner 279cases such as unrolling a loop from 1D to 0D. 280 281## LLVM Lowering Tradeoffs 282 283This section describes the tradeoffs involved in lowering the MLIR n-D vector 284type and operations on it to LLVM-IR. Putting aside the 285[LLVM Matrix](http://lists.llvm.org/pipermail/llvm-dev/2018-October/126871.html) 286proposal for now, this assumes LLVM only has built-in support for 1-D vector. 287The relationship with the LLVM Matrix proposal is discussed at the end of this 288document. 289 290LLVM instructions are prefixed by the `llvm.` dialect prefix (e.g. 291`llvm.insertvalue`). Such ops operate exclusively on 1-D vectors and aggregates 292following the [LLVM LangRef](https://llvm.org/docs/LangRef.html). MLIR 293operations are prefixed by the `vector.` dialect prefix (e.g. 294`vector.insertelement`). Such ops operate exclusively on MLIR `n-D` `vector` 295types. 296 297### Alternatives For Lowering an n-D Vector Type to LLVM 298 299Consider a vector of rank n with static sizes `{s_0, ... s_{n-1}}` (i.e. an MLIR 300`vector<s_0x...s_{n-1}xf32>`). Lowering such an `n-D` MLIR vector type to an 301LLVM descriptor can be done by either: 302 3031. Nested aggregate type of `1-D` vector: 304 `!llvm."[s_0x[s_1x[...<s_{n-1}xf32>]]]">` in the MLIR LLVM dialect (current 305 lowering in MLIR). 3062. Flattening to a `1-D` vector: `!llvm<"(s_0*...*s_{n-1})xfloat">` in the MLIR 307 LLVM dialect. 3083. A mix of both. 309 310There are multiple tradeoffs involved in choosing one or the other that we 311discuss. It is important to note that “a mix of both” immediately reduces to 312“nested aggregate type of 1-D vector” with a `vector.cast %0: 313vector<4x8x16x32xf32> to vector<4x4096xf32>` operation, that flattens the most 314"k" minor dimensions. 315 316### Constraints Inherited from LLVM (see LangRef) 317 318The first constraint was already mentioned: LLVM only supports `1-D` `vector` 319types natively. Additional constraints are related to the difference in LLVM 320between vector and 321[aggregate types](https://llvm.org/docs/LangRef.html#aggregate-types): 322> Aggregate Types are a subset of derived types that can contain multiple 323> member types. Arrays and structs are aggregate types. Vectors are not 324> considered to be aggregate types. 325 326This distinction is also reflected in some of the operations. For `1-D` vectors, 327the operations `llvm.extractelement`, `llvm.insertelement`, and 328`llvm.shufflevector` apply, with direct support for dynamic indices. For `n-D` 329vectors with `n>1`, and thus aggregate types at LLVM level, the more restrictive 330operations `llvm.extractvalue` and `llvm.insertvalue` apply, which only accept 331static indices. There is no direct shuffling support for aggregate types. 332 333The next sentence (cf. LangRef [structure 334type](https://llvm.org/docs/LangRef.html#structure-type)) illustrates a 335recurrent tradeoff, also found in MLIR, between 336“value types” (subject to SSA use-def chains) and “memory types” (subject to 337aliasing and side-effects): 338> Structures in memory are accessed using ‘load’ and ‘store’ by getting a 339> pointer to a field with the llvm.getelementptr instruction. Structures in 340> registers are accessed using the llvm.extractvalue and llvm.insertvalue 341> instructions. 342 343When transposing this to MLIR, `llvm.getelementptr` works on pointers to `n-D` 344vectors in memory. For `n-D`, vectors values that live in registers we can use 345`vector.extract` and `vector.insert` which do not accept dynamic indices. Note 346that this is consistent with hardware considerations as discussed below. 347 348An alternative is to use an LLVM `1-D` `vector` type for which one can use 349`llvm.extractelement`, `llvm.insertelement` and `llvm.shufflevector`. These 350operations accept dynamic indices. The implication is that one has to use a 351flattened lowering of an MLIR n-D vector to an LLVM 1-D vector. 352 353There are multiple tradeoffs involved that mix implications on the programming 354model, execution on actual HW and what is visible or hidden from codegen. They 355are discussed in the following sections. 356 357### Nested Aggregate 358 359Pros: 360 3611. Natural encoding n-D vector -> (n-1)-D aggregate over 1-D vector. 3622. No need for linearization / delinearization logic inserted everywhere. 3633. `llvm.insertvalue`, `llvm.extractvalue` of `(n-k)-D` aggregate is natural. 3644. `llvm.insertelement`, `llvm.extractelement`, `llvm.shufflevector` over `1-D` 365 vector type is natural. 366 367Cons: 368 3691. `llvm.insertvalue` / `llvm.extractvalue` does not accept dynamic indices but 370 only static ones. 3712. Dynamic indexing on the non-most-minor dimension requires roundtrips to 372 memory. 3733. Special intrinsics and native instructions in LLVM operate on `1-D` vectors. 374 This is not expected to be a practical limitation thanks to a `vector.cast 375 %0: vector<4x8x16x32xf32> to vector<4x4096xf32>` operation, that flattens 376 the most minor dimensions (see the bigger picture in implications on 377 codegen). 378 379### Flattened 1-D Vector Type 380 381Pros: 382 3831. `insertelement` / `extractelement` / `shufflevector` with dynamic indexing 384 is possible over the whole lowered `n-D` vector type. 3852. Supports special intrinsics and native operations. 386 387Cons: 388 3891. Requires linearization/delinearization logic everywhere, translations are 390 complex. 3912. Hides away the real HW structure behind dynamic indexing: at the end of the 392 day, HW vector sizes are generally fixed and multiple vectors will be needed 393 to hold a vector that is larger than the HW. 3943. Unlikely peephole optimizations will result in good code: arbitrary dynamic 395 accesses, especially at HW vector boundaries unlikely to result in regular 396 patterns. 397 398### Discussion 399 400#### HW Vectors and Implications on the SW and the Programming Model 401 402As of today, the LLVM model only support `1-D` vector types. This is 403unsurprising because historically, the vast majority of HW only supports `1-D` 404vector registers. We note that multiple HW vendors are in the process of 405evolving to higher-dimensional physical vectors. 406 407In the following discussion, let's assume the HW vector size is `1-D` and the SW 408vector size is `n-D`, with `n >= 1`. The same discussion would apply with `2-D` 409HW `vector` size and `n >= 2`. In this context, most HW exhibit a vector 410register file. The number of such vectors is fixed. Depending on the rank and 411sizes of the SW vector abstraction and the HW vector sizes and number of 412registers, an `n-D` SW vector type may be materialized by a mix of multiple 413`1-D` HW vector registers + memory locations at a given point in time. 414 415The implication of the physical HW constraints on the programming model are that 416one cannot index dynamically across hardware registers: a register file can 417generally not be indexed dynamically. This is because the register number is 418fixed and one either needs to unroll explicitly to obtain fixed register numbers 419or go through memory. This is a constraint familiar to CUDA programmers: when 420declaring a `private float a[4]`; and subsequently indexing with a *dynamic* 421value results in so-called **local memory** usage (i.e. roundtripping to 422memory). 423 424#### Implication on codegen 425 426MLIR `n-D` vector types are currently represented as `(n-1)-D` arrays of `1-D` 427vectors when lowered to LLVM. This introduces the consequences on static vs 428dynamic indexing discussed previously: `extractelement`, `insertelement` and 429`shufflevector` on `n-D` vectors in MLIR only support static indices. Dynamic 430indices are only supported on the most minor `1-D` vector but not the outer 431`(n-1)-D`. For other cases, explicit load / stores are required. 432 433The implications on codegen are as follows: 434 4351. Loops around `vector` values are indirect addressing of vector values, they 436 must operate on explicit load / store operations over `n-D` vector types. 4372. Once an `n-D` `vector` type is loaded into an SSA value (that may or may not 438 live in `n` registers, with or without spilling, when eventually lowered), 439 it may be unrolled to smaller `k-D` `vector` types and operations that 440 correspond to the HW. This level of MLIR codegen is related to register 441 allocation and spilling that occur much later in the LLVM pipeline. 4423. HW may support >1-D vectors with intrinsics for indirect addressing within 443 these vectors. These can be targeted thanks to explicit `vector_cast` 444 operations from MLIR `k-D` vector types and operations to LLVM `1-D` 445 vectors + intrinsics. 446 447Alternatively, we argue that directly lowering to a linearized abstraction hides 448away the codegen complexities related to memory accesses by giving a false 449impression of magical dynamic indexing across registers. Instead we prefer to 450make those very explicit in MLIR and allow codegen to explore tradeoffs. 451Different HW will require different tradeoffs in the sizes involved in steps 1., 4522. and 3. 453 454Decisions made at the MLIR level will have implications at a much later stage in 455LLVM (after register allocation). We do not envision to expose concerns related 456to modeling of register allocation and spilling to MLIR explicitly. Instead, 457each target will expose a set of "good" target operations and `n-D` vector 458types, associated with costs that `PatterRewriters` at the MLIR level will be 459able to target. Such costs at the MLIR level will be abstract and used for 460ranking, not for accurate performance modeling. In the future such costs will be 461learned. 462 463#### Implication on Lowering to Accelerators 464 465To target accelerators that support higher dimensional vectors natively, we can 466start from either `1-D` or `n-D` vectors in MLIR and use `vector.cast` to 467flatten the most minor dimensions to `1-D` `vector<Kxf32>` where `K` is an 468appropriate constant. Then, the existing lowering to LLVM-IR immediately 469applies, with extensions for accelerator-specific intrinsics. 470 471It is the role of an Accelerator-specific vector dialect (see codegen flow in 472the figure above) to lower the `vector.cast`. Accelerator -> LLVM lowering would 473then consist of a bunch of `Accelerator -> Accelerator` rewrites to perform the 474casts composed with `Accelerator -> LLVM` conversions + intrinsics that operate 475on `1-D` `vector<Kxf32>`. 476 477Some of those rewrites may need extra handling, especially if a reduction is 478involved. For example, `vector.cast %0: vector<K1x...xKnxf32> to vector<Kxf32>` 479when `K != K1 * … * Kn` and some arbitrary irregular `vector.cast %0: 480vector<4x4x17xf32> to vector<Kxf32>` may introduce masking and intra-vector 481shuffling that may not be worthwhile or even feasible, i.e. infinite cost. 482 483However `vector.cast %0: vector<K1x...xKnxf32> to vector<Kxf32>` when `K = K1 * 484… * Kn` should be close to a noop. 485 486As we start building accelerator-specific abstractions, we hope to achieve 487retargetable codegen: the same infra is used for CPU, GPU and accelerators with 488extra MLIR patterns and costs. 489 490#### Implication on calling external functions that operate on vectors 491 492It is possible (likely) that we additionally need to linearize when calling an 493external function. 494 495### Relationship to LLVM matrix type proposal. 496 497The LLVM matrix proposal was formulated 1 year ago but seemed to be somewhat 498stalled until recently. In its current form, it is limited to 2-D matrix types 499and operations are implemented with LLVM intrinsics. In contrast, MLIR sits at a 500higher level of abstraction and allows the lowering of generic operations on 501generic n-D vector types from MLIR to aggregates of 1-D LLVM vectors. In the 502future, it could make sense to lower to the LLVM matrix abstraction also for CPU 503even though MLIR will continue needing higher level abstractions. 504 505On the other hand, one should note that as MLIR is moving to LLVM, this document 506could become the unifying abstraction that people should target for 1-D vectors 507and the LLVM matrix proposal can be viewed as a subset of this work. 508 509### Conclusion 510 511The flattened 1-D vector design in the LLVM matrix proposal is good in a 512HW-specific world with special intrinsics. This is a good abstraction for 513register allocation, Instruction-Level-Parallelism and 514SoftWare-Pipelining/Modulo Scheduling optimizations at the register level. 515However MLIR codegen operates at a higher level of abstraction where we want to 516target operations on coarser-grained vectors than the HW size and on which 517unroll-and-jam is applied and patterns across multiple HW vectors can be 518matched. 519 520This makes “nested aggregate type of 1-D vector” an appealing abstraction for 521lowering from MLIR because: 522 5231. it does not hide complexity related to the buffer vs value semantics and the 524 memory subsystem and 5252. it does not rely on LLVM to magically make all the things work from a too 526 low-level abstraction. 527 528The use of special intrinsics in a `1-D` LLVM world is still available thanks to 529an explicit `vector.cast` op. 530 531## Operations 532 533[include "Dialects/VectorOps.md"] 534